This webpage is used to showcase/back up some of my coding projects. It will get progressively cooler as I put time into it, but I haven't yet.

Feel free to also check out http://www.awright2009.com

Email: resume@awright2009.com

So, having an electrical engineering degree, I've felt like I should be able to make a PCB with a simple processor (if not make a simple integrated circuit myself), but I have never really been taught board layout or what software packages to use (I guess board layout isn't typically taught in EE degree plans). When I worked at DRS Technologies, the guys over there used Cadence to re-layout TI reference designs, but I wasn't exposed to it much beyond seeing the board layout software in passing, being present for new board bring-up, and frequently hearing about Jabil and Probasco in meetings regarding PCB manufacturing and mechanical designs. I did look at schematics pretty heavily for my own software needs, though. Maybe a missed opportunity there to learn more from the EE guys. Nowadays, in the world of YouTube and cheap Chinese manufacturing from JLCPCB and PCBWay, I would say making your own PCB is much more accessible to everyone.

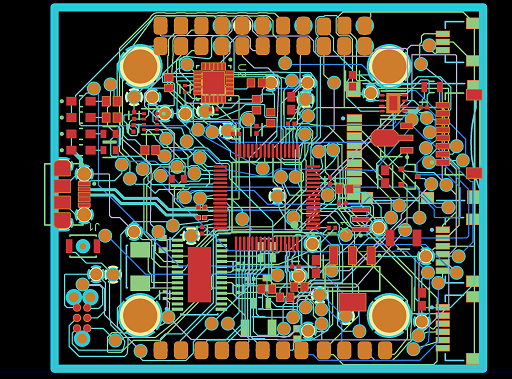

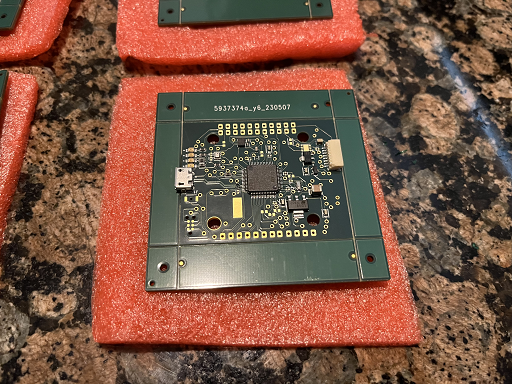

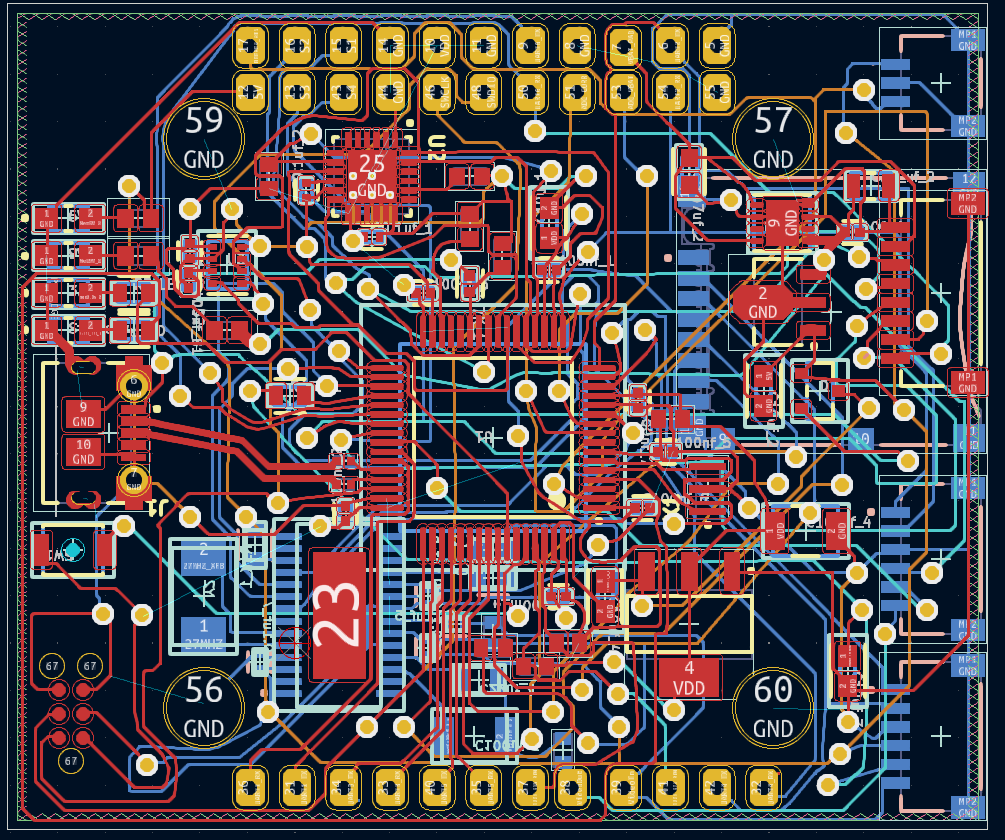

With the help of the Phil's Lab YouTube channel and free Altium Designer student licenses from my graduate degree, I've thrown together a 6-layer STM32F7-based flight controller that is still a work in progress. I ordered five boards of the first rev, and during their engineering review they showed that some capacitors and resistors that should have been there were missing. (Net naming confusion maybe? Maybe BOM parts not in stock?) Rather than going back and forth with them a bit more, I just had those versions made, which means I had to solder a lot of 0402 capacitors and a few 0603 resistors myself. That isn't bad if you have the right tools. I would recommend sticking to 0603 if possible, though, and don't go below 0402 without a pick and place machine doing things for you.

On the first board my soldering wasn't perfect, and I missed one solder bridge on inspection, which killed the BAT54C Schottky diode. (I will have to replace that with my hot air station eventually.) The second board I soldered well enough to get the processor programmed. The I2C bus works, the SPI bus works mostly (more on that later), programming via USB works, and programming via ST-Link v2 SWD works (which also allows debugging). Eventually, though, I noticed I had connected my SPI bus devices backwards (SDI-SDI and SDO-SDO, where it should be SDI-SDO and SDO-SDI). I think I can blue wire those well enough for testing, using a pen grinder similar to northridgefix, before making a revision 2 board. The SD card works, as the SPI bus pins on there were labeled differently and I didn't fall into the same hole. I've since got a third board soldered up that works, and after getting tired of using tweezers for the DFU boot button, I recently purchased some of the missing buttons from DigiKey and will solder those on.

For some reason Betaflight (the software intended to run on the flight controller) locks up on startup, and I'm still debugging that issue. At first glance it looks like noise on a line is coming in as telemetry input from the motors. For more testing I picked up an STM32F4 "feather" from Adafruit that comes up with Betaflight (if you program the generic STM32F405 image) as a reference point, and bought external versions of the same sensors I'm using on the board, both to nail down any configuration needed and to narrow down any differences that may cause issues in the design. Right now I can talk to the BMP280 sensor over SPI using the external device and my own code base, but Betaflight still doesn't see it for whatever reason.

If you aren't familiar with Betaflight, it is the firmware/software that runs most FPV quadcopters, which are essentially composed of a carbon fiber frame, four brushless motors, an ESC (electronic speed controller, a half-bridge brushless motor driver), and the flight controller. The flight controllers are mainly composed of an STM32 F4 or F7 and a MEMS gyro (BMI270, MPU6000, or ICM206XX). They also provide a lot of UARTs for interfacing with radio protocols such as ELRS or for adding GPS/compass or similar peripherals. Occasionally you'll also see a barometer, such as the BMP280 on my board, used to provide more accurate altitude information than GPS.
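As a rough illustration of how a barometer reading turns into an altitude estimate, here is a minimal sketch using the standard international barometric formula. The function name and the default sea-level pressure are assumptions for illustration; this is not code from Betaflight or from the board checkout software.

```python
SEA_LEVEL_HPA = 1013.25  # standard sea-level pressure; real code would calibrate this


def pressure_to_altitude_m(pressure_hpa, sea_level_hpa=SEA_LEVEL_HPA):
    """Estimate altitude in meters from absolute pressure in hPa.

    Uses the international barometric formula, which assumes a
    standard atmosphere, so it is only an approximation.
    """
    ratio = pressure_hpa / sea_level_hpa
    return 44330.0 * (1.0 - ratio ** (1.0 / 5.255))


if __name__ == "__main__":
    for hpa in (1013.25, 1000.0, 950.0, 900.0):
        print(f"{hpa:8.2f} hPa -> {pressure_to_altitude_m(hpa):7.1f} m")
```

In practice the reference pressure is usually taken at arming time, so the result is height above the takeoff point rather than above sea level.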

In terms of making a CMOS IC, I would say the cost is still prohibitively expensive at a hobbyist level. But generally EDA software from Synopsys, Mentor Graphics, or Cadence generates the various regions of silicon and outputs them to a GDS (GDSII) file that you can view pretty easily in something like KLayout. Think of the GDS files as the equivalent of Gerber files for a PCB. That said, I think FPGAs and VHDL are a nice substitute from a cost perspective compared to having a fab make your custom IC.

And, if you want to make a PCB, you don't have to use Altium Designer (which is very expensive). You can use KiCad, which is free, and I've found it imports Altium designs pretty easily, so you could even load up this design with it.

http://github.com/akw0088/FlightController/ -- Note: the software directory contains code mainly for board / sensor checkout. The intended firmware is Betaflight, of course.

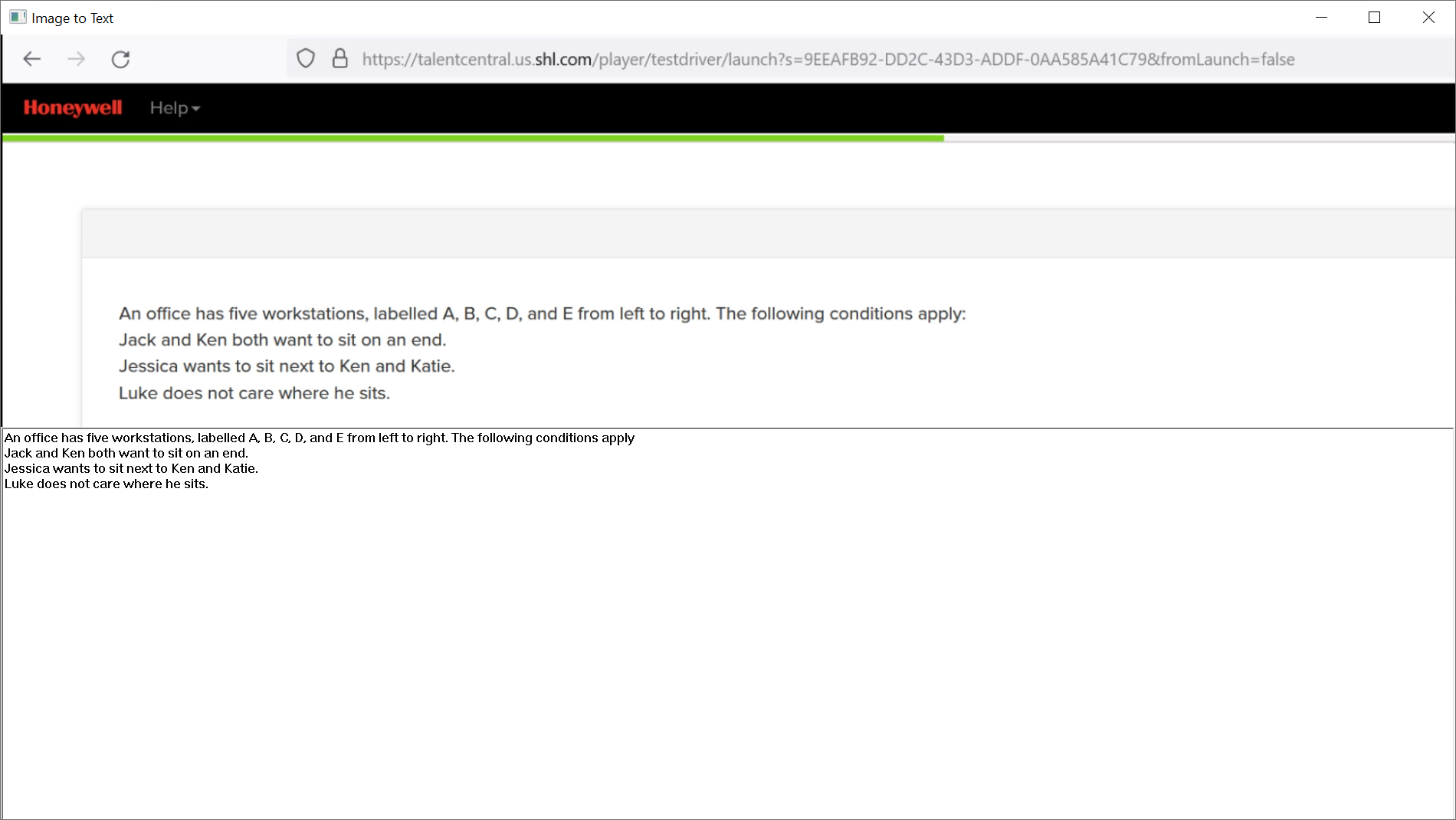

So, I had to take an online assessment which had a lot of "Constraint Satisfaction Problems", which are questions like:

Jack handles all shipments on Tuesdays.

Jack never handles shipments of cleaning supplies.

Chemical supplies arrive later in the week than cleaning supplies.

Sometimes Jack handles shipments of engine parts.

Which statements are True?

Engine parts arrive earlier in the week than chemical supplies.

Chemical supplies arrive on Wednesday.

Engine parts and painting supplies arrive on the same day.

Cleaning supplies do not arrive on Tuesdays.

Chemical supplies arrive on Thursday.

If you are like me, after about 3 or 4 of these you're like, screw this, I'm typing it into ChatGPT. Anyway, you couldn't copy and paste the text, so I had to type it out. I'm not sure if the webpage blocked copying, or if it was a Firefox bug from around the same time that prevented copying text, but either way I could not copy and paste the text. So I thought it would be nice to have an application that takes image text and converts it to ASCII text, and that is what this is.

This app acts like a magnifier and is very similar to the Microsoft magnifier sample, but with the added benefit of letting you hold control and click to scan the magnified image for text and place the ASCII in an edit control down below. The scaling and cropping aren't perfect, and there are some weird issues there. The app will save out.png and cropped.bmp to show the full screen capture and the cropped capture that was actually fed into the Tesseract OCR library. I'll go back later and fix the zooming with the mouse wheel and the cropping to make it perfect (I'm not sure if the crop works when zoomed), but it seems functional now and I was happy to get a copy out there so I don't lose what is working so far.

The Tesseract OCR library has a lot of dependencies, which I built with vcpkg and included in the src zip file to make building again easier. You also need to make the application DPI aware in the manifest, or things won't crop correctly when the desktop DPI is not set to 100%.

To get the text out I tended to press the context menu key to the right of the right Windows key, but you could probably also just right click slightly off screen and hit copy. The issue is that the magnifier app is always on top, so it kind of covers things. I may replace the edit control with something a bit more sophisticated, as I had figured it would be easier to copy and paste out of there than it is. Sadly I did not pass the assessment. :( -- But if I had this app I probably would have.
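On the DPI point: when an app is not DPI aware, Windows hands it coordinates in logical pixels (96 DPI is the 100% setting), while the screen capture is in physical pixels, so a crop rectangle ends up in the wrong place. A minimal sketch of the conversion, with a made-up helper name and rectangle layout that are not taken from the app's source:

```python
BASE_DPI = 96  # Windows treats 96 DPI as the 100% scaling setting


def scale_rect_for_dpi(rect, dpi, base_dpi=BASE_DPI):
    """Convert a (left, top, right, bottom) rect from logical to physical pixels."""
    scale = dpi / base_dpi
    return tuple(round(value * scale) for value in rect)


if __name__ == "__main__":
    logical = (100, 50, 300, 150)
    # 144 DPI corresponds to the 150% desktop scaling setting
    print(scale_rect_for_dpi(logical, 144))
```

Marking the application DPI aware in the manifest avoids needing this kind of correction, because the window and the capture then share the same physical coordinate space.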

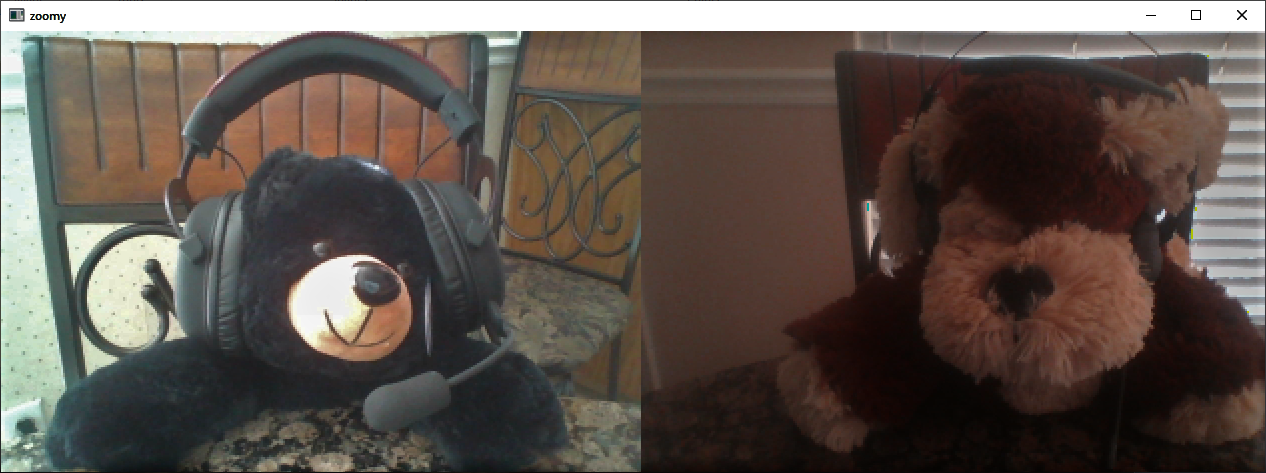

I thought to myself during this pandemic, I bet I could make Zoom. You know, the video conferencing app? So, why not. This, which I creatively dubbed "Zoomy", just supports two people; each side has a listening socket and connects to the other, peer-to-peer style. Video is over TCP, and audio uses another pair of sockets over UDP. The Voice over IP (audio) uses the Opus codec and OpenAL, which works really nicely. I already had VoIP implemented in my graphics engine, so you would think adding it here would be quick and easy. I did too, but it wasn't. The video itself is captured using the old-style "Video for Windows" APIs, and the resolution is 320x240 upscaled to 640x480. The capture actually uses YUYV format, so the data transfer is 320*240*2 bytes (153,600 bytes, or 150 KB per frame), which isn't too bad. Since each side requires a listening socket, you'll need to open things up in your firewall. One could make a server-side app that just relays video, but that is too much trouble for something like this, I think.
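The frame size arithmetic can be sketched like this. YUYV stores two pixels in four bytes, which is where the factor of 2 comes from. The frame rate below is an assumed value for illustration only; the actual capture rate is not stated here.

```python
WIDTH, HEIGHT = 320, 240
BYTES_PER_PIXEL = 2   # YUYV: 4 bytes (Y0 U Y1 V) cover 2 pixels
ASSUMED_FPS = 15      # assumption for illustration, not a measured value


def frame_bytes(width, height, bytes_per_pixel=BYTES_PER_PIXEL):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def bandwidth_mbit(frame_size, fps):
    """Raw video bandwidth in megabits per second (ignoring TCP overhead)."""
    return frame_size * fps * 8 / 1_000_000


if __name__ == "__main__":
    size = frame_bytes(WIDTH, HEIGHT)
    print(size, "bytes per frame =", size / 1024, "KB")
    print(bandwidth_mbit(size, ASSUMED_FPS), "Mbit/s at", ASSUMED_FPS, "fps")
```

Numbers like these are why uncompressed streaming is fine on a LAN but starts to hurt over a slower link.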

Real Zoom uses H.264 and a similar resolution, I hear. Using a video encoder is overkill for a simple app, I think, but it could be added easily enough. I've probably sunk too much time into this already over the past few days, though. If I were to use a video encoder I wouldn't want anything GPU assisted, so software only. I would probably use ffmpeg's libavcodec. H.264 or VP9 sound nice, but probably don't meet the real-time requirement with a software encoder. Even JPEG or MPEG2 would be an improvement, I think. Code is on my github here: http://github.com/akw0088/zoomy/

Using the same principles, it shouldn't be too hard to make a remote desktop type app with the same bitmap streaming. So afterward I was thinking of making a copy called roomy or something for remote desktop type remote control, maybe just adding mouse support and forcing on-screen keyboard usage if people really want to type things. I could also make the server side hidden, making it sort of a payload or something to spook your friends. Or add key logging and all kinds of stuff that would probably get it marked as spyware or something.

I had to borrow one stuffed animal because I didn't have two :p

(They are discussing how you measure the depth of the ocean using an apple)

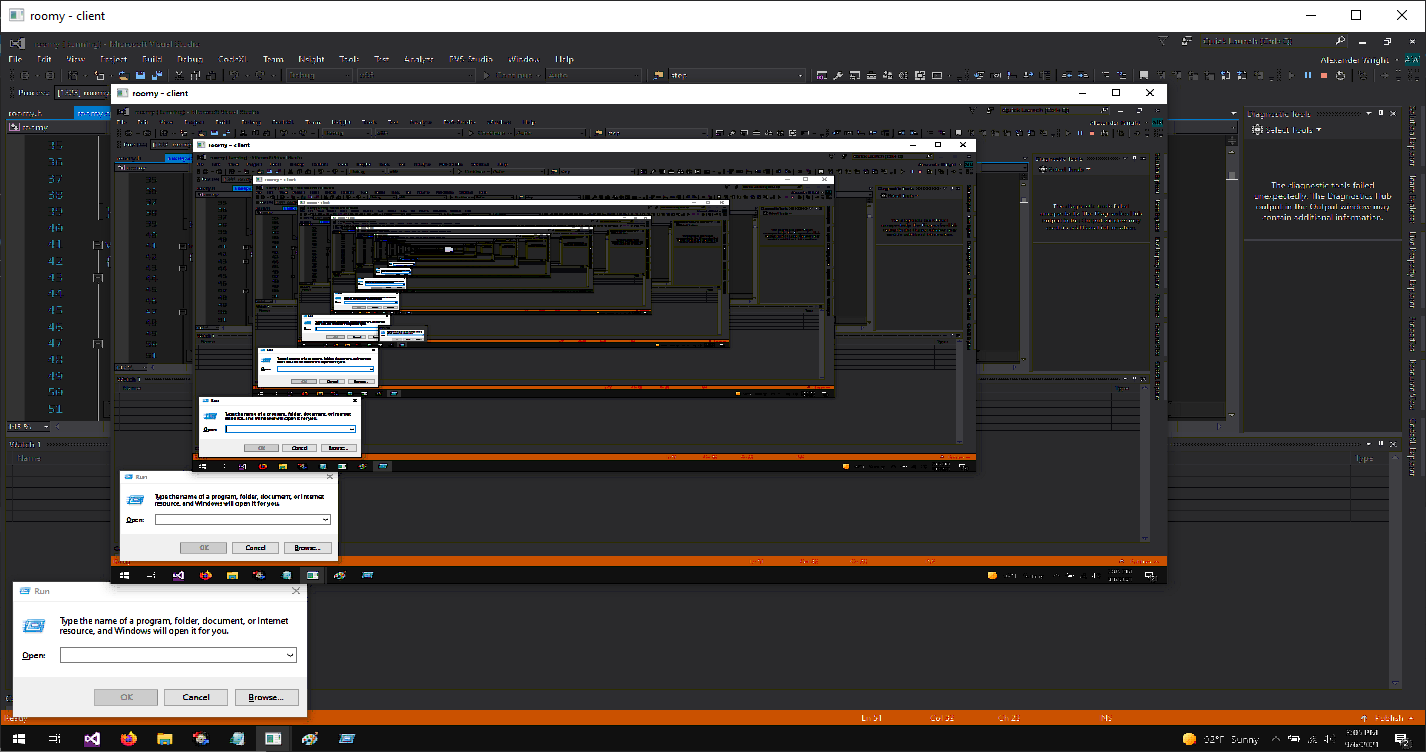

So... about that roomy idea. This is the remote desktop app. It still needs work, but it now supports the mouse and keyboard. Be careful, though, as the screen updates slightly slowly. It started with just the idea that streaming webcam bitmaps is pretty similar to streaming desktop bitmaps. Getting a viewer working wasn't too bad with the initial code from zoomy. Once that worked decently I added the mouse support first, which was a bit of effort, but not the end of the world. I originally wasn't going to add the keyboard, but the keyboard was actually mind-blowingly easy to add (just a keycode and an up/down state). Run the binary in server mode on the target machine with listen enabled, then on a different machine, mark server and listen off and set the IP to the computer running the server. It should then connect and start displaying the remote machine's desktop. Be sure to allow the app through the Windows firewall too. The server can run hidden, so kill it in Task Manager if you started it and it disappeared. You can set up the server to connect out and the client to listen if you'd like. I tested it over the net without compression and it works pretty well through my VPN. (Although I'm still amazed that I can VPN, wake on LAN my PC, remote desktop to it, do things, shut it down, and disconnect the VPN, all from my laptop from any location.) Feel free to try it out, but it is not quite as nice as VNC or RDP (remote desktop) yet...
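To show why the keyboard is so little work, here is a minimal sketch of a key event message carrying just a keycode and an up/down state. The three-byte layout and the function names are hypothetical and are not roomy's actual wire format.

```python
import struct

# Hypothetical layout: little-endian uint16 keycode + uint8 state (1 = down, 0 = up)
KEY_EVENT = struct.Struct("<HB")


def pack_key_event(keycode, is_down):
    """Serialize one key event into 3 bytes for sending over a socket."""
    return KEY_EVENT.pack(keycode, 1 if is_down else 0)


def unpack_key_event(data):
    """Decode 3 bytes back into (keycode, is_down)."""
    keycode, state = KEY_EVENT.unpack(data)
    return keycode, bool(state)


if __name__ == "__main__":
    message = pack_key_event(0x41, True)   # 0x41 is the virtual key code for 'A'
    print(message.hex(), unpack_key_event(message))
```

The receiving side would then replay each decoded event into the operating system's input queue. Mouse events need more fields (position, buttons, wheel), which fits with the mouse being the more involved part.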

The screenshot is from running the client and server on the same machine. You get a hall of mirrors type effect, as it captures a screenshot of the window, which then displays it, then captures again, and so on. You'll see this same sort of thing with Zoom desktop sharing too, but I thought it looked interesting. The second screenshot is of Google. It's just the window screenshot, so the start menu you see is actually from the remote computer. (Which is true for the first screenshot too, actually.)

Oh yeah, github link here: http://github.com/akw0088/roomy/

For my Electrical Engineering senior design project our subteam was tasked with transmitting digital data from a microcontroller to a remote location for analysis. The data being transmitted is filtered EKG (heartbeat) data from a microcontroller connected via contactless sensors to a marathon runner. Cardiac arrest can occur when running long distances, and the idea is to prevent this by monitoring for irregular heartbeats.

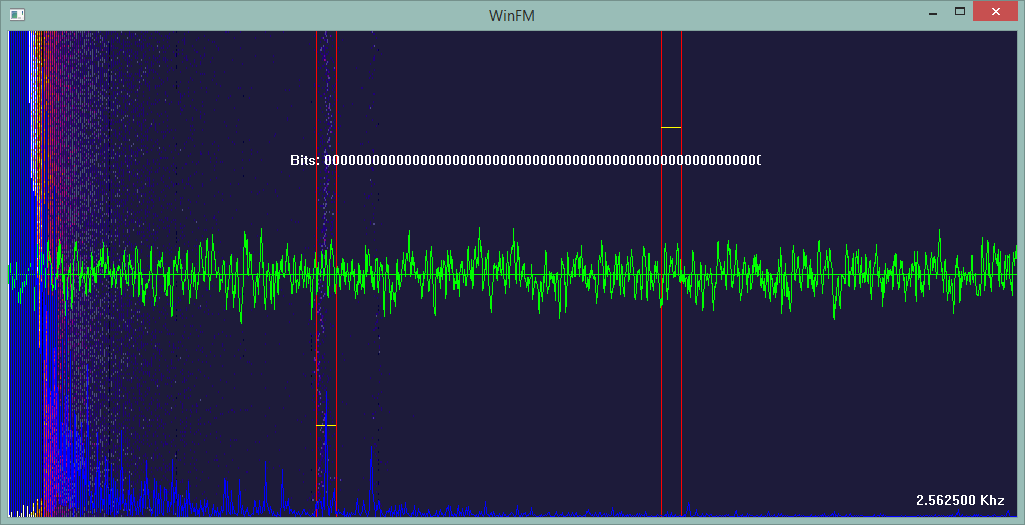

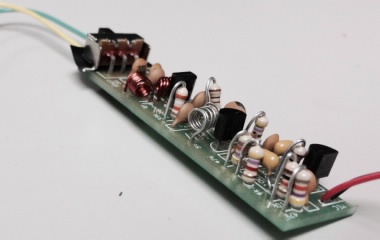

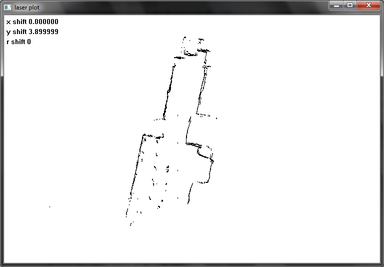

Previous work used commercial off-the-shelf Bluetooth communication chips, but was limited to short transmission distances. Our group (Mohammed Alsadah and me) decided to perform Binary Frequency Shift Keying over FM using TTL level serial data from the microcontroller located on the runner's shirt. For FM reception we took advantage of the RTL2832U USB chip, which can be used as a general software defined radio receiver. Note: This was a single semester project. For the first semester we did an EEG controlled (brain wave) robotic hand, but this one is cooler/more EE related imho.
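For readers unfamiliar with Binary Frequency Shift Keying, here is a minimal audio-band sketch: each bit becomes a short burst of one of two tones, and the receiver decides which tone is stronger in each bit period. The sample rate, baud rate, and tone frequencies are assumed values, and the sketch leaves out the FM transmitter and the RTL2832U receive chain entirely, so it is not the project's actual signal path.

```python
import math

SAMPLE_RATE = 48000                     # samples per second (assumed)
BAUD = 1200                             # bits per second (assumed)
F_SPACE = 1200.0                        # tone for a 0 bit, in Hz (assumed)
F_MARK = 2400.0                         # tone for a 1 bit, in Hz (assumed)
SAMPLES_PER_BIT = SAMPLE_RATE // BAUD   # 40 samples per bit


def modulate(bits):
    """Turn a list of 0/1 bits into phase-continuous tone samples."""
    samples = []
    phase = 0.0
    for bit in bits:
        freq = F_MARK if bit else F_SPACE
        step = 2.0 * math.pi * freq / SAMPLE_RATE
        for _ in range(SAMPLES_PER_BIT):
            samples.append(math.sin(phase))
            phase += step
    return samples


def tone_energy(chunk, freq):
    """Correlate a chunk against a tone (phase independent energy estimate)."""
    w = 2.0 * math.pi * freq / SAMPLE_RATE
    i = sum(s * math.cos(w * n) for n, s in enumerate(chunk))
    q = sum(s * math.sin(w * n) for n, s in enumerate(chunk))
    return i * i + q * q


def demodulate(samples):
    """Recover bits by comparing mark and space energy in each bit period."""
    bits = []
    for start in range(0, len(samples) - SAMPLES_PER_BIT + 1, SAMPLES_PER_BIT):
        chunk = samples[start:start + SAMPLES_PER_BIT]
        is_mark = tone_energy(chunk, F_MARK) > tone_energy(chunk, F_SPACE)
        bits.append(1 if is_mark else 0)
    return bits


if __name__ == "__main__":
    sent = [1, 0, 1, 1, 0, 0, 1, 0]
    print(demodulate(modulate(sent)) == sent)
```

The tones are chosen so that each fits a whole number of cycles into one bit period, which keeps them orthogonal and makes the energy comparison clean. A real receiver would also need bit timing recovery and serial start/stop bit framing.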

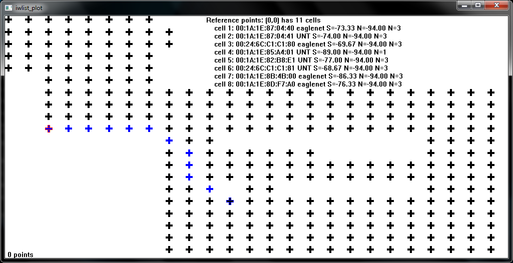

For my BSCE senior design project our team was tasked with determining the location of robots using the school's wifi network and having the robots meet when given a command to do so. We were provided four Acroname Garcia robots, each of which contains a "gumstix" embedded computer that runs Linux, complete with a wifi expansion module for connectivity.

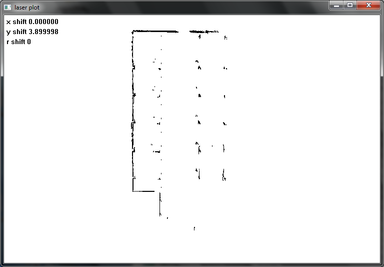

After reading various graduate thesis papers about wifi localization we decided to use what is known as the fingerprinting method. The idea is that you create a database of wireless signal strength readings at various reference points and compare the current location's signal to the database. The "closest" point in terms of signal distance is considered the correct location.
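A minimal sketch of the fingerprinting idea, using Euclidean distance between signal strength vectors. The access point names, reference points, and the -100 dBm fill value for unseen access points are all made up for illustration; this is not the project's actual database or distance metric.

```python
import math

MISSING_DBM = -100.0  # assumed fill value for an access point that was not heard

# Hypothetical fingerprint database: reference point -> {access point: RSSI in dBm}
FINGERPRINTS = {
    "hallway_a": {"ap1": -40.0, "ap2": -70.0},
    "lab_door": {"ap1": -75.0, "ap2": -45.0},
    "stairwell": {"ap1": -60.0, "ap3": -50.0},
}


def signal_distance(a, b, missing=MISSING_DBM):
    """Euclidean distance between two readings over the union of access points."""
    access_points = set(a) | set(b)
    return math.sqrt(sum(
        (a.get(ap, missing) - b.get(ap, missing)) ** 2 for ap in access_points
    ))


def locate(reading, fingerprints=FINGERPRINTS):
    """Return the reference point whose stored reading is closest to the input."""
    return min(fingerprints, key=lambda name: signal_distance(reading, fingerprints[name]))


if __name__ == "__main__":
    print(locate({"ap1": -45.0, "ap2": -68.0}))
```

Signal strength is noisy, so averaging several scans per reference point, or voting among the few nearest points, tends to be more stable than a single nearest match.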

In order to accomplish this, we created various tools to view database information, visualize signal information, etc in addition to the code required for localization and meeting.

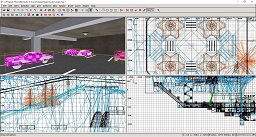

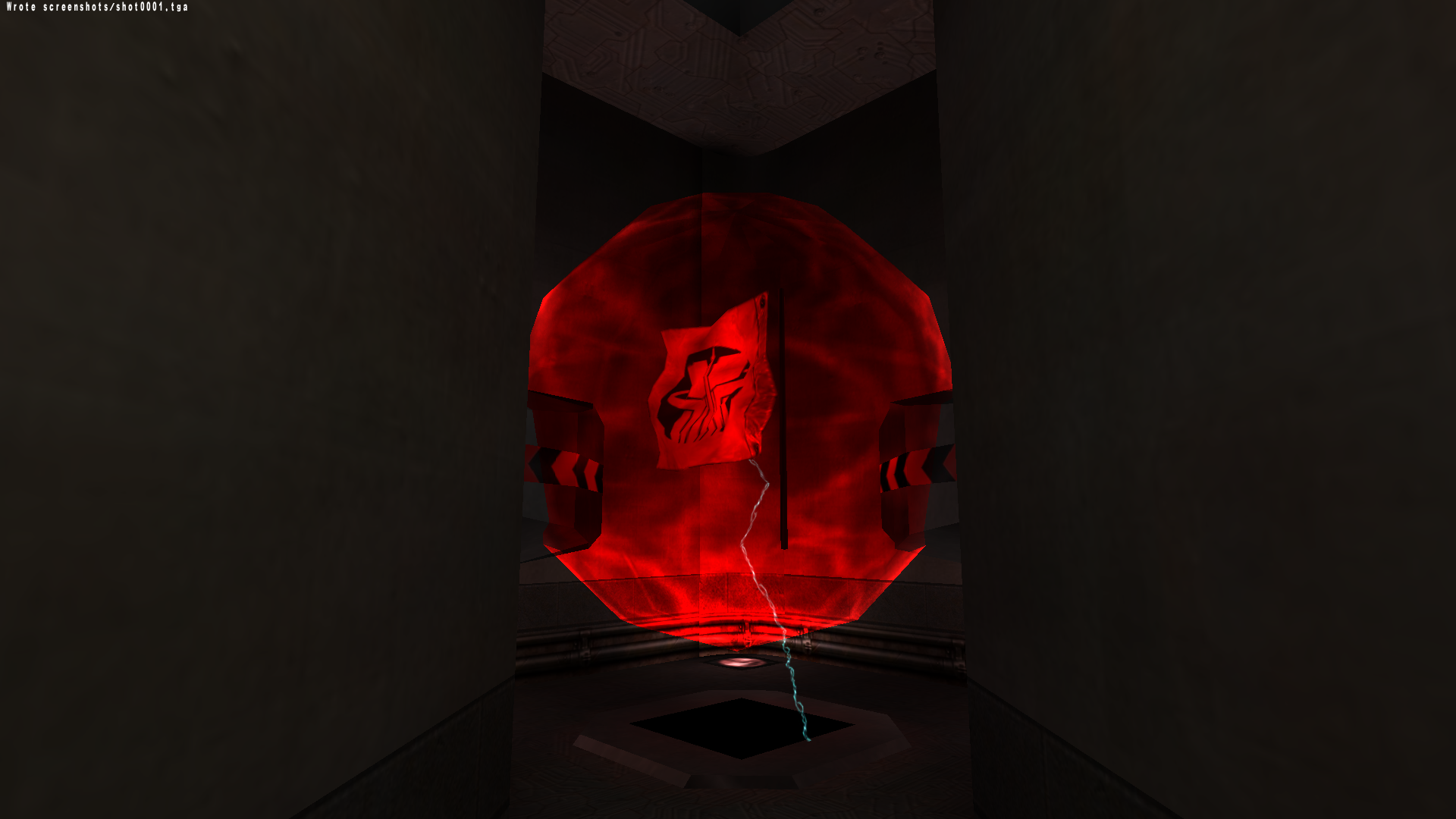

altEngine2 is the current version of my cross platform 3D engine that I work on whenever I have long periods of free time. It makes use of Quake 3 format BSP maps.

Update: It changed quite a bit in 2018 or so and now supports OpenGL 4.4, D3D11, and software rendering (perspective correct texture mapping, mipmapping with bilinear filtering). I added two more recent videos below. I had some issue with NVIDIA ShadowPlay recording properly in full screen, so I left them windowed. The downloads page has dates / changelog if interested. (Click the OldVersions link.)

I wrote this for a compilers course. It makes use of flex for scanning the input program into tokens and bison for the shift-reduce parsing of the grammar. What did I do, you ask? Well, I made everything that flex/bison needed to work and wrote the code generation code. This was done in three weekends. The assembly generated is Intel style assembly (AT&T style assembly can rot and die). The GNU assembler can assemble Intel style x86 assembly easily with a few flags, so the final output can and does work on both Windows and Linux. The babel language was created by Professor Sweeny. It's a basic language, but has all the requirements (loops, if statements, functions, recursion, etc.).

I really fought the idea of jumping to the end of a function to allocate temporary storage stack space and then jumping back, which is inefficient and therefore morally wrong for me to do as a programmer. (It is impossible to avoid this with single pass compilation.) But I probably should have done it that way anyway, as it gets complicated if you don't. I stored all temps on the stack. If anything, this should serve as a quick example of how to use flex and bison. [Professor is a Linux guy, so I used bzip2]

These are projects from an EE digital logic project course. The code should all compile and work in the Xilinx tools and run on a Spartan-3E board, but I'm not sure if these are the latest versions we used, as I pulled them out of old emails. I mainly have these here in case I want to use the Spartan board I bought again.

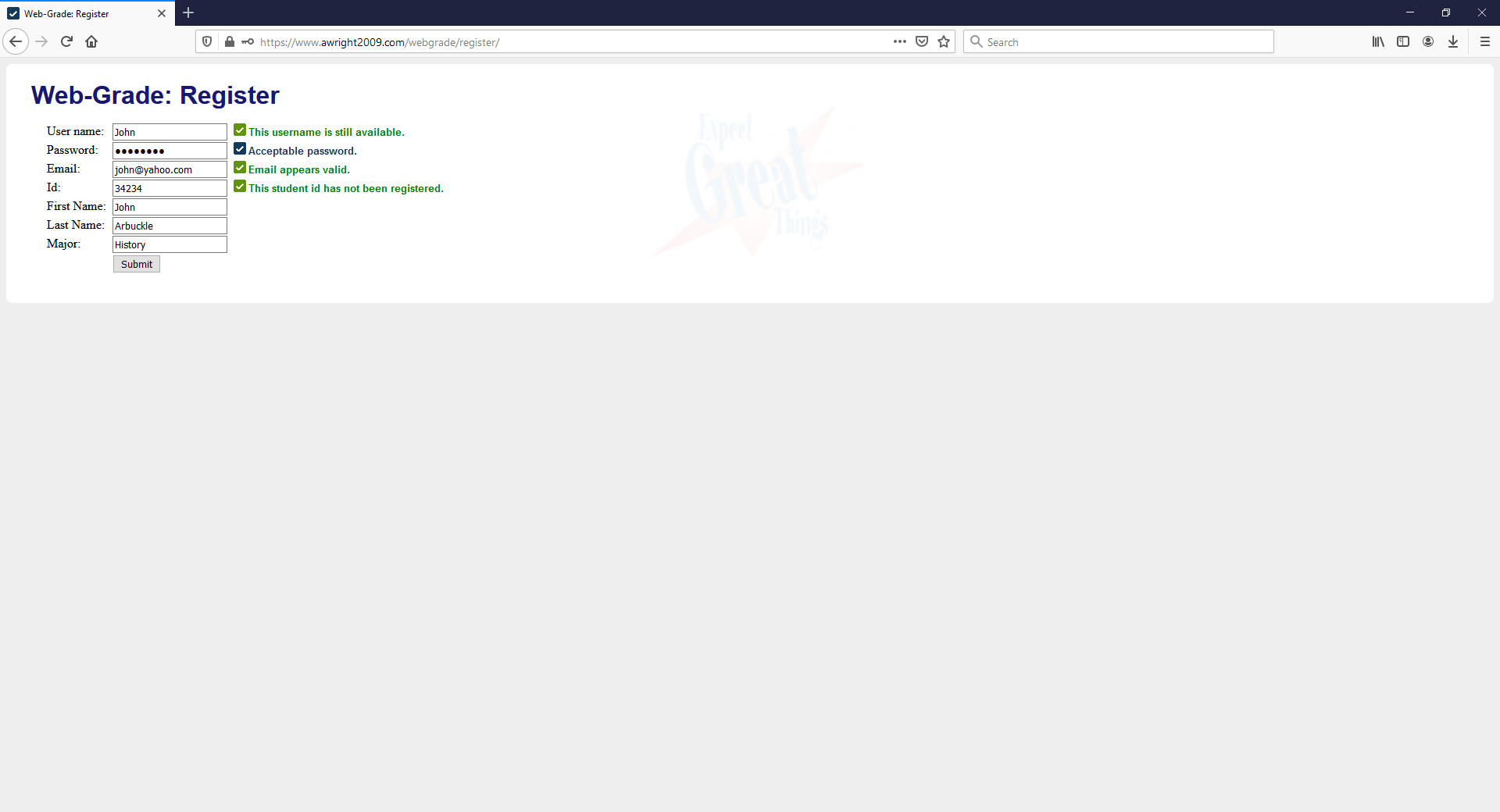

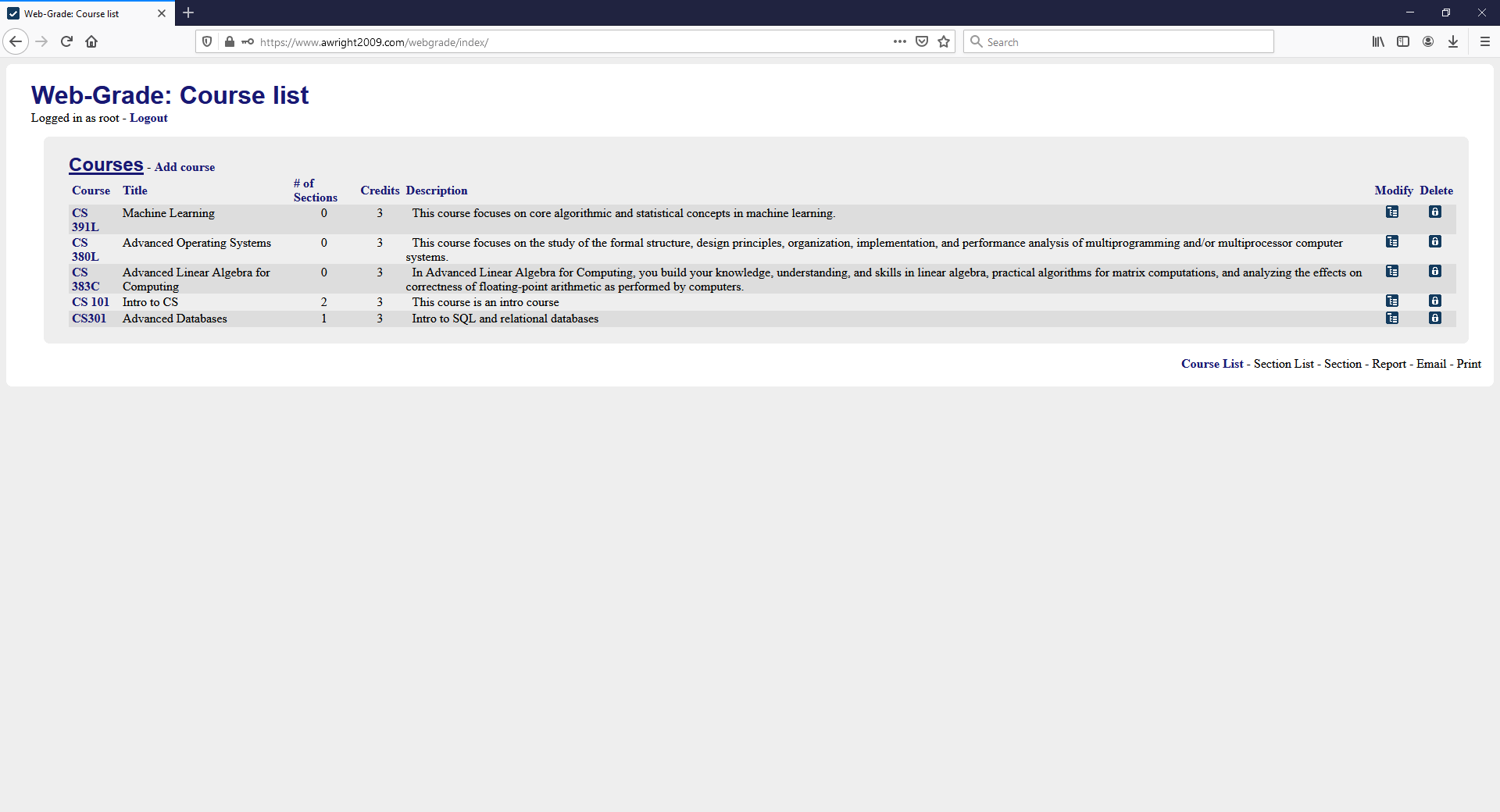

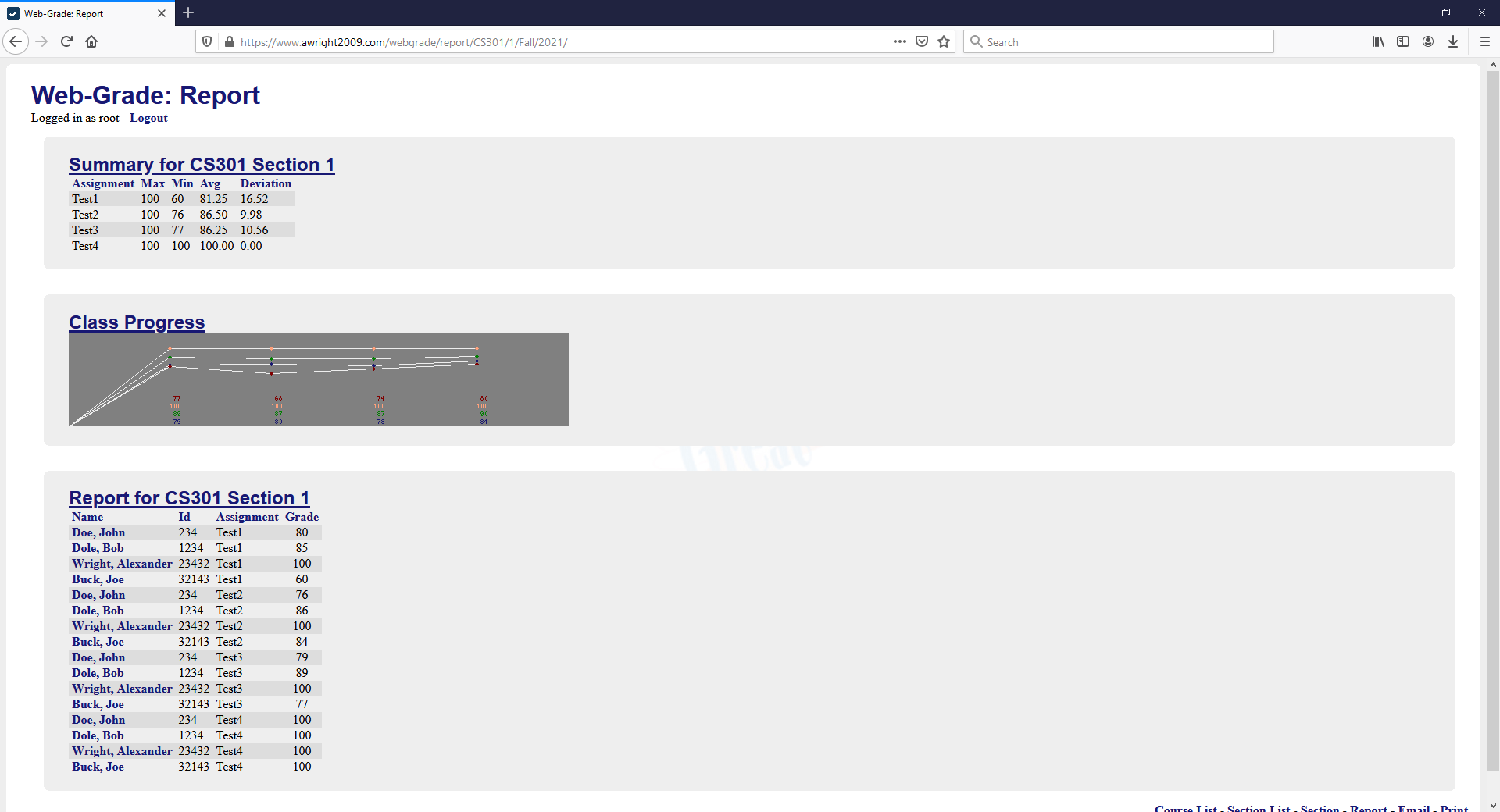

WebGrade is a university course management system that was a team project for a Fundamentals of Software Engineering course. I was the team leader of a group of three other students and also acted as lead developer. WebGrade allows an administrator or student to log in to manage courses or view grades respectively. It is XHTML Strict compliant and separates content from presentation via cascading style sheets. Download its source code below; the username and password are both root.

Download WebGrade - YouTube

altEngine is the first version of my engine. This one is C instead of C++ and is pretty bare bones in comparison, but it's the only copy I had lying about. I started using a local git repo on a server in 2009 before moving to github in 2012, and was just going without source control prior to that. It runs on Windows and X windowing systems. (Runs fine on Linux and Mac OS X with X Window extensions installed.)

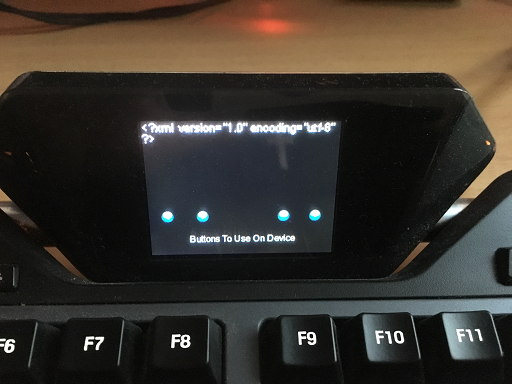

clipMon is an LCD clipboard monitor for the Logitech G15 Keyboard. (It still works on the newer G19 keyboard released 3 years later.) It is written in C and makes use of the Windows API and the low level Logitech LCD SDK. (Logitech later released a high level C++ API.) Source code is included with the binaries. I had to write my own word wrapping code because of difficulties using a higher level WinAPI text function, and I took a different (i.e. weird) approach with exception handling. I wrote this thing in roughly 6 hours and like using it on occasion.

Like clipMon, ircMon is an Internet Relay Chat monitor for the Logitech G15 Keyboard. It is written in C and makes use of the Windows API, Winsock, and the same low level Logitech LCD SDK. Source is not included as it isn't pretty and the IRC protocol parser needs work. (I.e. it will crash on you.) It worked well enough for me to lose interest in it. -- Added the source code, cause why not. I should fix it one day.

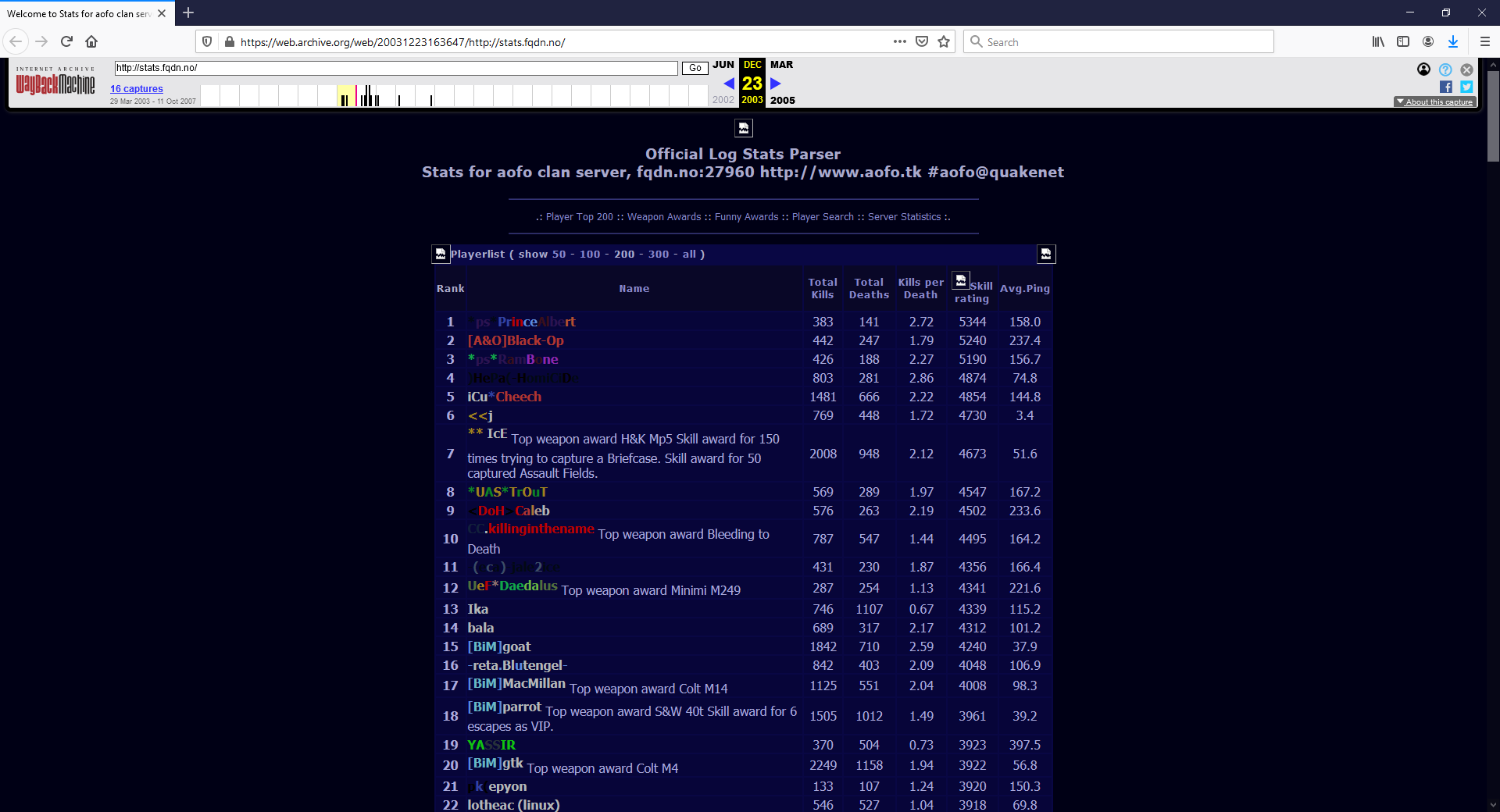

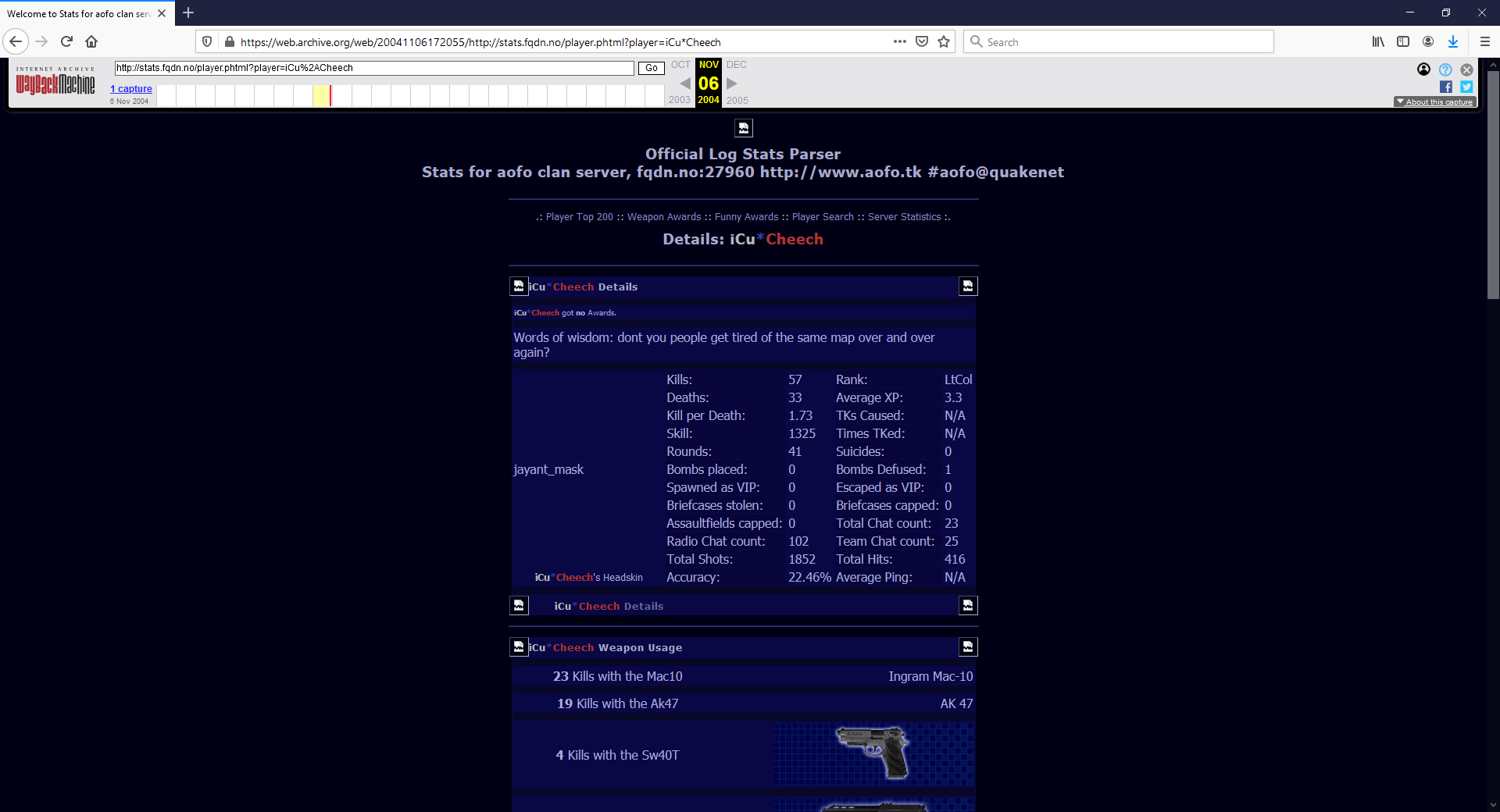

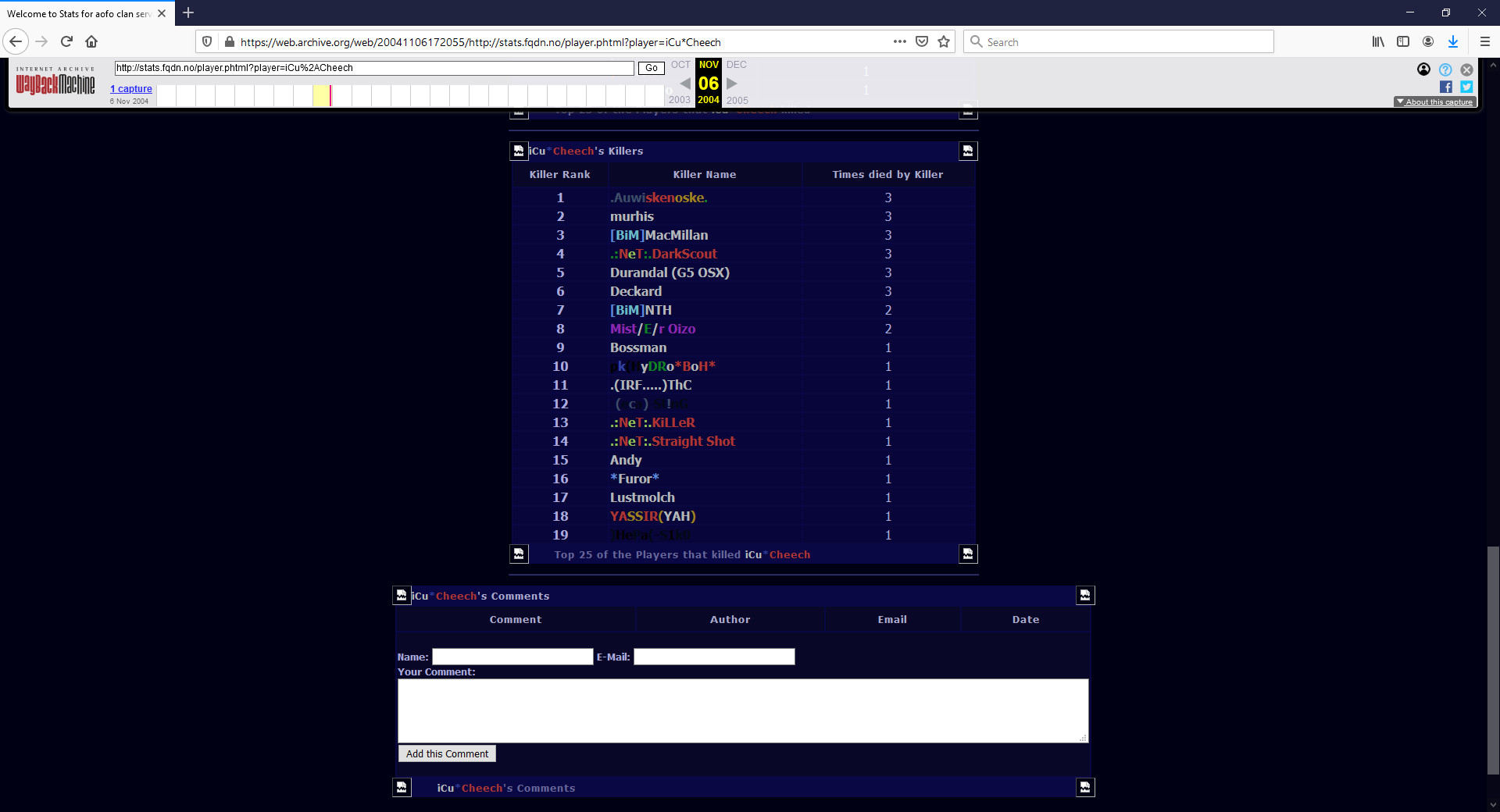

Download ircMon

Sealstats generates player rankings and stores statistical data from the Quake 3 modification Navy Seals. It is based on existing source code given to me by 'LostCause', who created the stats program for Urban Terror, another Quake 3 modification. I ported the code to work for Navy Seals and modified/extended existing functionality. A Perl script parses the game log file in order to calculate statistics and inserts relevant data into a MySQL database via the Perl DBD module. PHP is then used to read from the database and display the statistical information. All of this happens in real time as players play the game.

When I asked for a logfile to help test the code, the developers of the mod took interest in the program and made it an official part of the Navy Seals modification. I delivered what I had and they took control of it from there. Images and HTML code were developed by democritis, and other coding work was done by defcon-X.

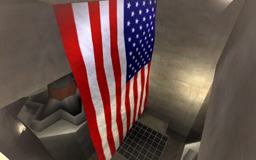

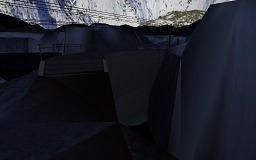

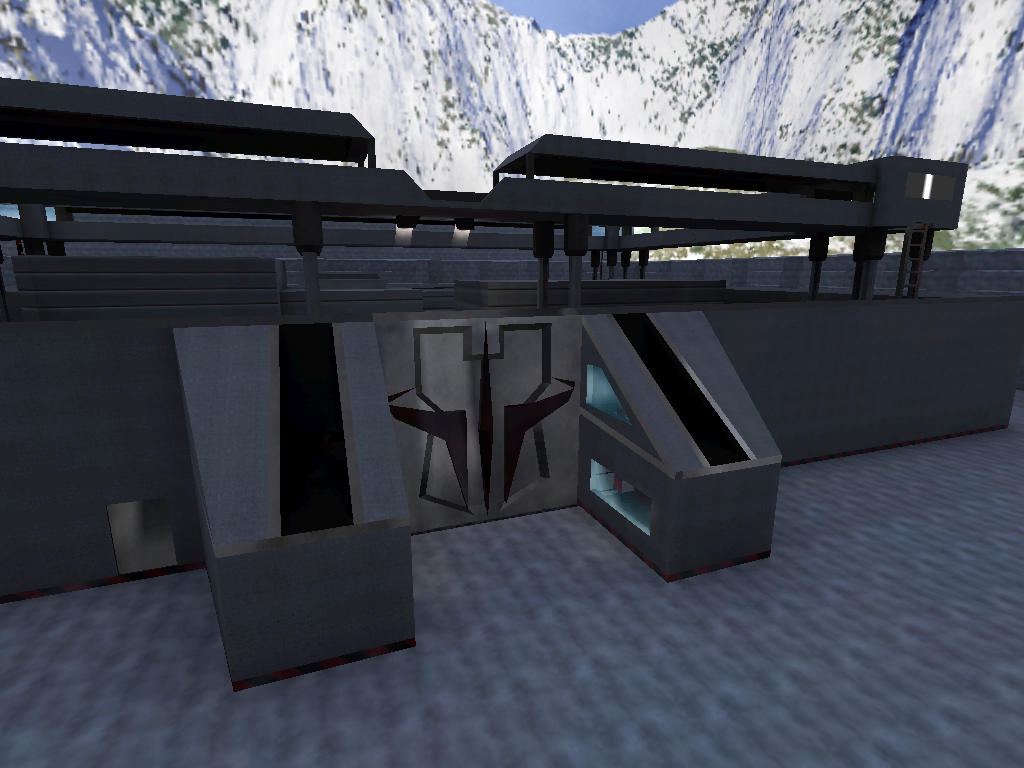

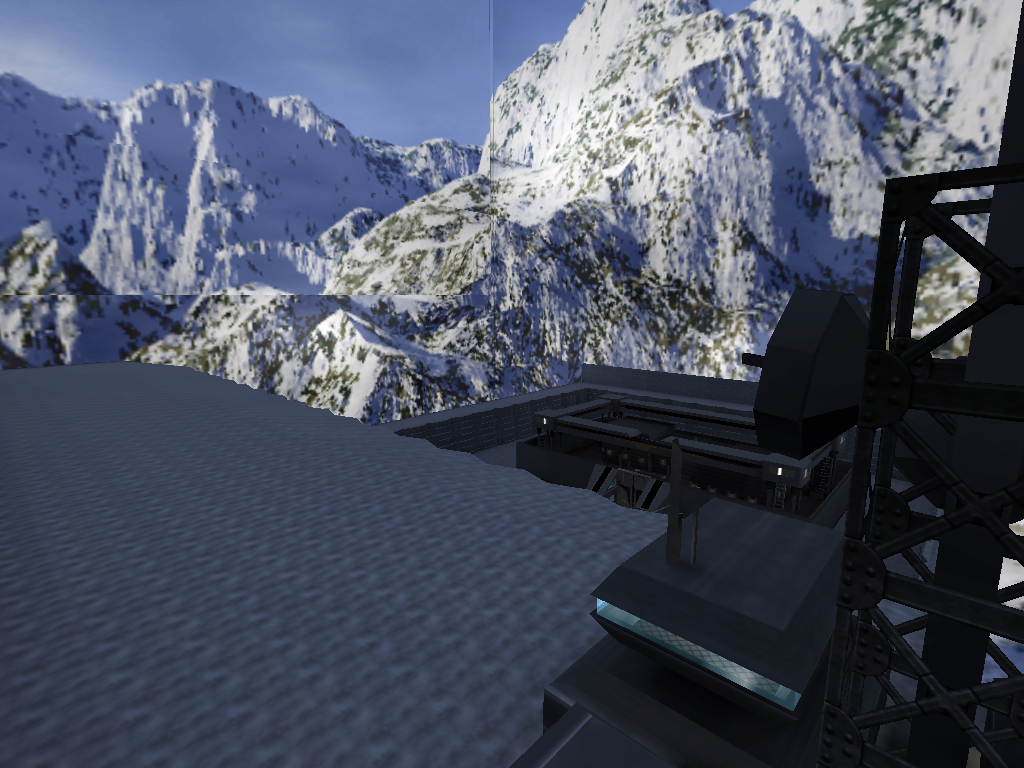

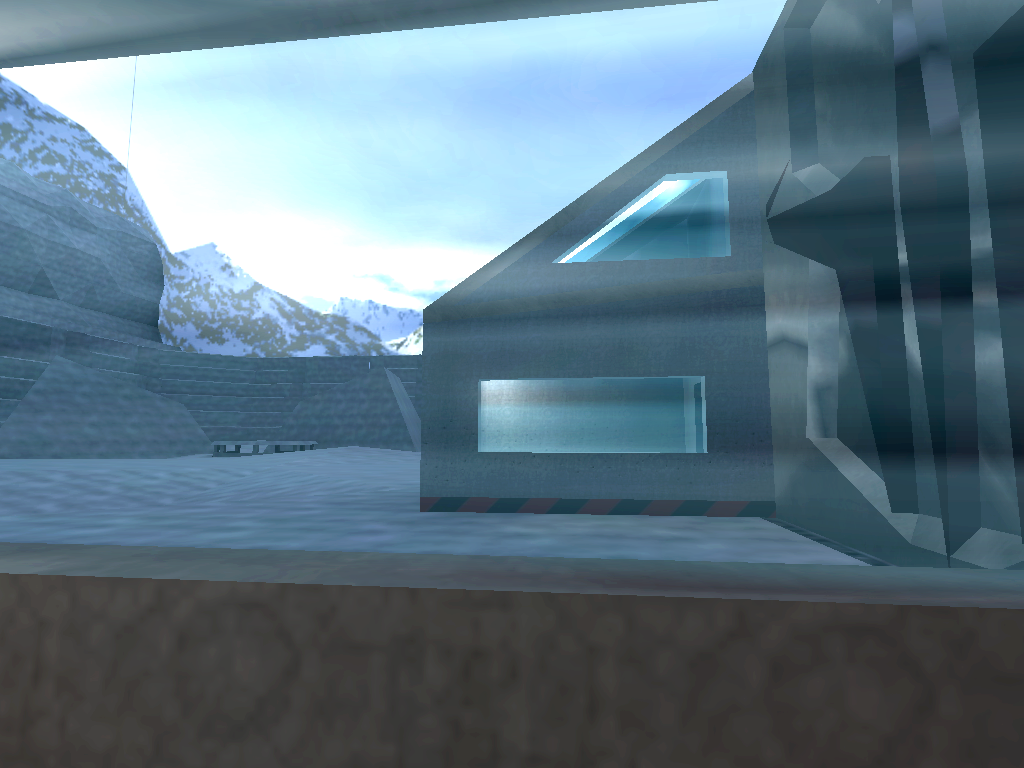

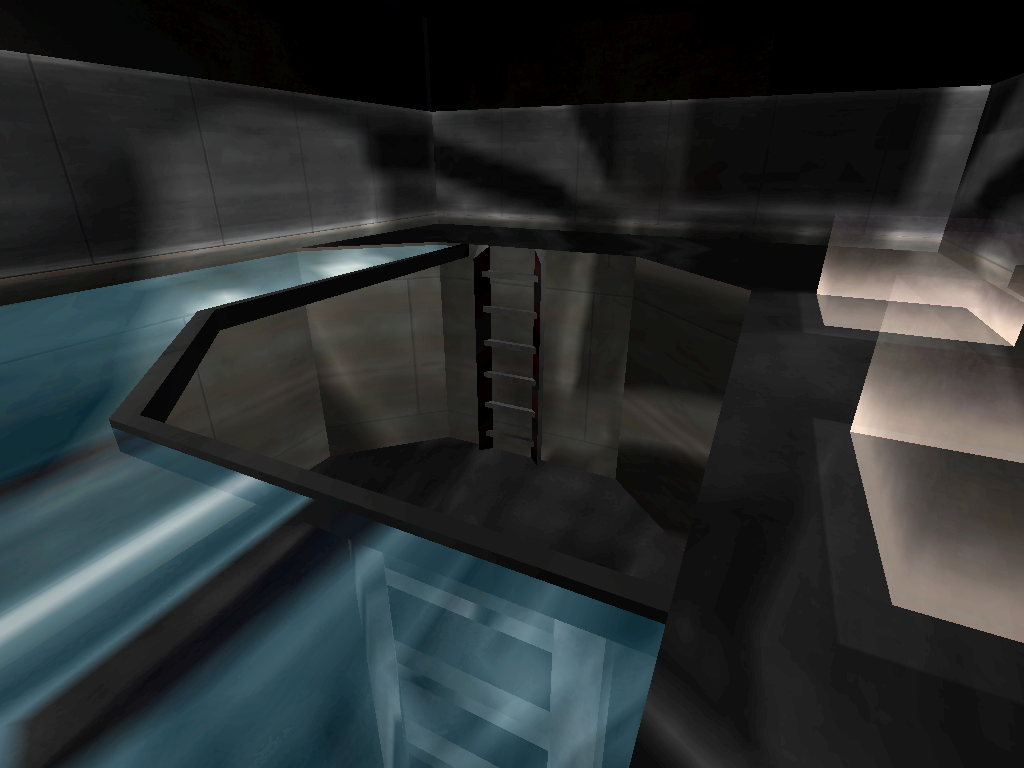

ns_dam is a map for the Quake 3 modification Navy Seals that I made and never released. The files were too large to upload here (now uploaded as of July 2016), but it is neat and shows some of my more artistic side (or lack thereof). It has Quake 3 Team Arena style terrain, a Terragen skybox, and just about every neat feature possible with the q3map2 compiler. I did a quick compile of the map a looong time ago, so the lighting isn't as perfect as it can be, and the screenshots were taken quickly with texture settings set crappily (mipmap nearest / picmip 1). Doing a good light compile can take days, and odd lighting in places can make you have to restart the whole process (while hoping the small changes you make to fix one thing don't break another).

(pk3 files are really zip files, and .map files are included for editing. I think it has some other maps I made in there too if you fire up q3radiant/gtkradiant, but just load the map with /devmap ns_dam)

Note: It does need mapmedia.pk3 from qeradiant and some assets from the navy seals mod (eg: snowy pine tree)

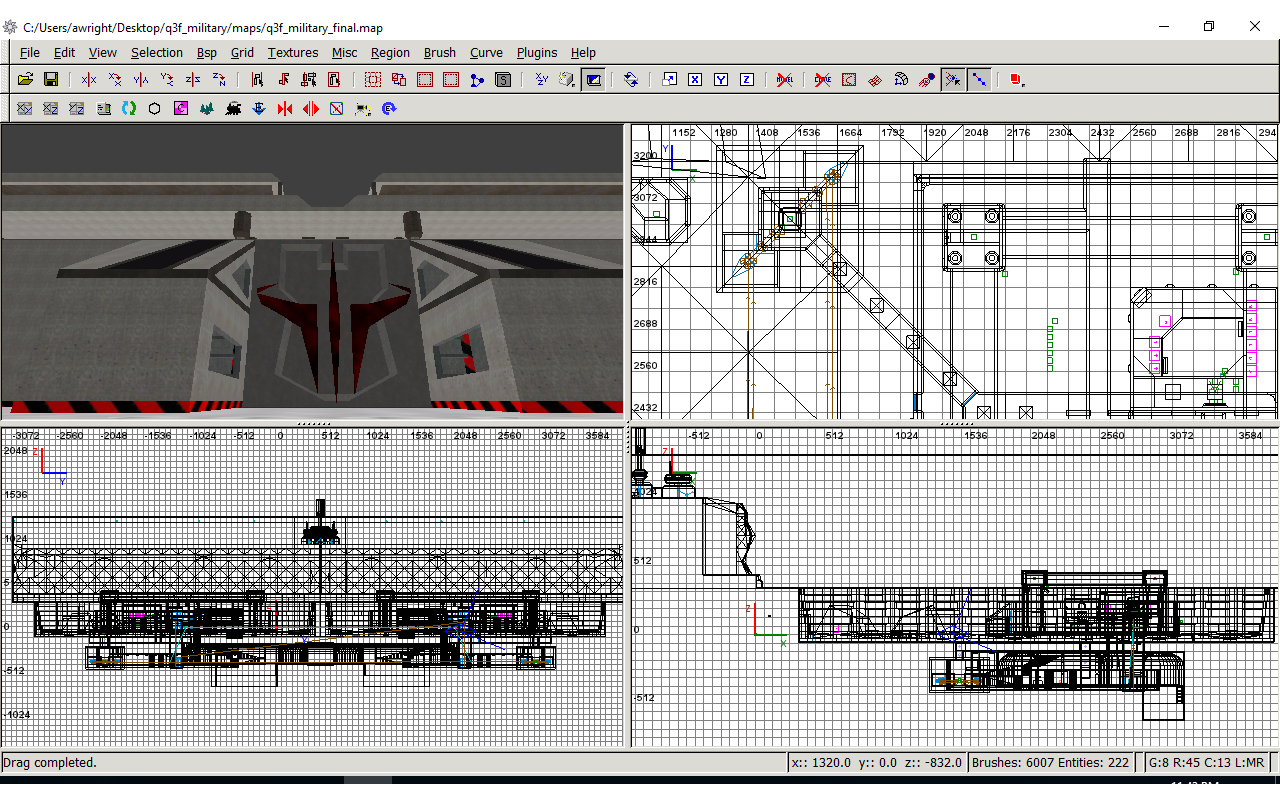

This is that "other map" that I was referring to. I went ahead and bsp'ed it and packed it up nicely. It is intended for the Quake 3 Team Fortress mod, q3f. Run it with /devmap q3f_military

Good Reference videos for geometric interpretation:

3Blue1Brown intro

3Blue1Brown rotation

3Blue1Brown interactive

You can think of unit quaternions as a four dimensional sphere where each vector element is orthogonal and rotating in a loop.

The fourth dimension distorts spheres into planes due to projecting down to 3d space.

(Think of a point on a sphere with infinite radius: it is essentially a plane.) We have one sphere for each dimension (real plane, x plane, y plane, z plane).

At each of the cardinal directions (1,0,0,0) (0,1,0,0) (0,0,1,0) (0,0,0,1) we have 3 planes and a unit sphere.

We limit ourselves to a unit quaternion to force our rotations to exist on a unit sphere in 3d. The fourth dimension extends to infinity in our 3d projection, but in reality it is just rotating in a dimension we cannot visualize.

[I learned from Chris Hecker's tutorials, Pixar's physically based modeling paper, and also a bit from Alan Watt's 3D Computer Graphics book]
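To make the unit quaternion restriction concrete, here is a minimal sketch of rotating a 3d vector with q * v * conjugate(q), using (w, x, y, z) tuples. The function names are chosen for illustration and are not taken from the engine source.

```python
import math


def quat_from_axis_angle(axis, angle):
    """Build a unit quaternion (w, x, y, z) from an axis and an angle in radians."""
    x, y, z = axis
    length = math.sqrt(x * x + y * y + z * z)
    s = math.sin(angle / 2.0) / length   # normalizes the axis as well
    return (math.cos(angle / 2.0), x * s, y * s, z * s)


def quat_mul(a, b):
    """Hamilton product of two quaternions."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate(q, v):
    """Rotate vector v by unit quaternion q using q * v * conjugate(q)."""
    w, x, y, z = q
    conjugate = (w, -x, -y, -z)          # equals the inverse only for unit quaternions
    pure = (0.0, v[0], v[1], v[2])       # embed the vector as a pure quaternion
    _, rx, ry, rz = quat_mul(quat_mul(q, pure), conjugate)
    return (rx, ry, rz)


if __name__ == "__main__":
    q = quat_from_axis_angle((0.0, 0.0, 1.0), math.pi / 2.0)
    print(rotate(q, (1.0, 0.0, 0.0)))    # approximately (0, 1, 0)
```

If q were not unit length, the same product would also scale the vector by the squared length of q, which is one practical reason to renormalize after accumulating many rotations.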